An analysis and evaluation of the PNY GeForce RTX 5080 ARGB OC

In the early days of computer gaming, graphics were confined to a handful of blocky pixels, but technical advances have since turned those scenes into something close to a vivid reality. This transition was not gradual; it came from technology breakthroughs that caused a qualitative shift in the user experience by improving realism and accuracy in every element of a game.

NVIDIA has emerged as a key player in this transformation, not only by enhancing graphics quality but also by letting gamers enjoy high frame rates on a variety of devices, resulting in a seamless and realistic experience for both professionals and regular users.

Since its inception, NVIDIA has strived to push the frontiers of graphics computing, developing technologies that have altered the conceptions of performance and quality with each new generation of its cards. These technologies have helped to create more complex virtual worlds and manage lighting and shadows with remarkable smoothness, as well as include artificial intelligence technologies that have become an essential component of the current graphics experience.

With the release of the GeForce RTX 5080 a few days ago, we saw a fresh quantum leap in the world of graphics. This card, based on the Blackwell architecture, comes with 16 GB of ultra-fast GDDR7 memory, allowing even the most demanding games and creative apps to operate with great quality and performance.

But the main question is what the card will add to your experience: will you feel like the rain in the game is genuinely falling on your face? Or will you be captivated by the nuances of the character's hair blowing in the breeze? And is sheer performance enough to wow us, or is the key concealed in technologies such as DLSS 4, which intelligently redraws missing frames?

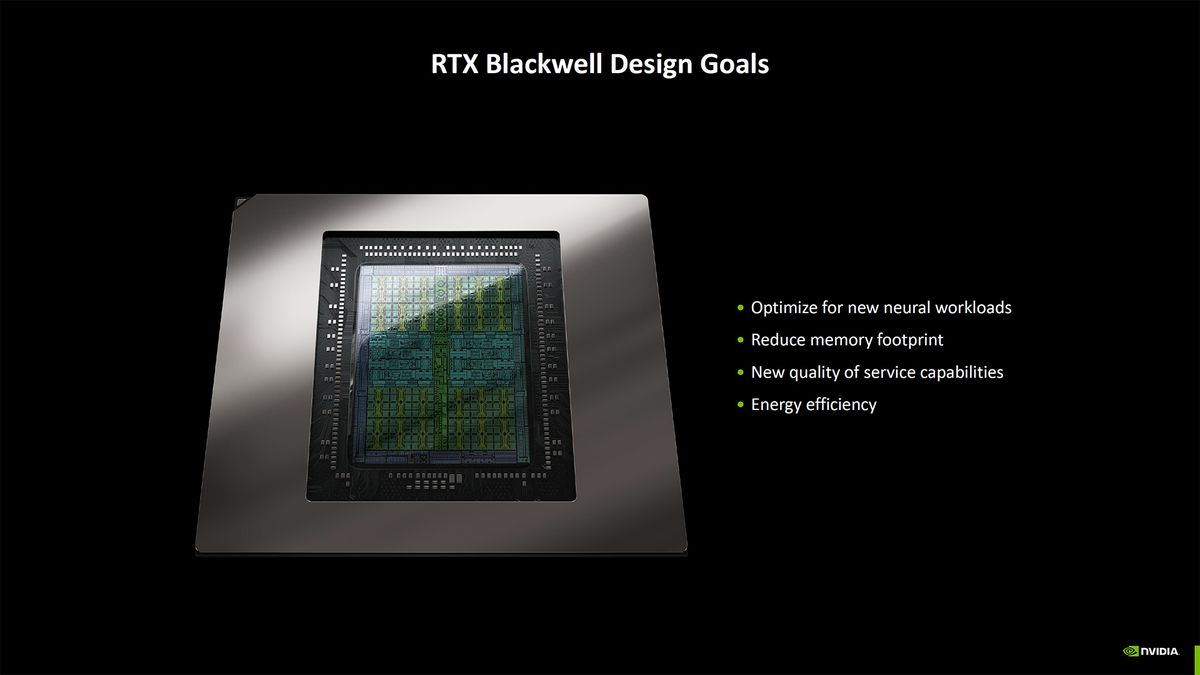

A Look at Blackwell's All-New Architecture: AI Takes Over.

Before we get into the specifics of the Blackwell design, let's take a look back at the Ada Lovelace architecture of the RTX 40 series, which dominated the graphics processor market in its time and gave gamers, creators, and scientists unrivaled capability. Despite its strengths, it had several limits in terms of heat, power consumption, and reliance on an older design, which paved the way for Blackwell as its successor.

NVIDIA has taken decades of experience and distilled it into a chip with 92 billion transistors in its largest Blackwell GPU, the GB202 used in the RTX 5090, more than any previous GeForce model (the RTX 5080's GB203 has 45.6 billion). This is more than just a number: transistors are the building blocks of a chip, and the more there are, the more complicated computations the design can carry out in parallel.

One of Blackwell's standout features is its deep integration with AI technology, which improves performance significantly, reaching up to 3,352 AI TOPS (trillions of AI operations per second) on the flagship RTX 5090 and 1,801 AI TOPS on the RTX 5080. These numbers may seem outlandish, yet they are now our reality. This means the new architecture is useful not just for gamers seeking the highest frame rates, but also as a full factory for processing massive data, deep learning applications, and neural networks.

The hardest problem in building a new architecture was not just increasing performance but also avoiding turning the GPU into a massive, power-hungry furnace. This is where Blackwell's innovation shines through, with solutions based on modern power distribution and heat management techniques. It employs a three-layer design to better dissipate heat, reducing the need for bulky cooling systems, and it relies on improved CUDA cores to reduce power consumption per calculation, as well as improvements at the transistor level that make them more efficient than previous generations.

In other words, the Blackwell architecture is more than simply a gain in power; it also aims for greater intelligence and efficiency, delivering higher performance without a matching rise in heat or power consumption.

Fifth-Generation Tensor Cores: The Backbone of AI

Designed for the present AI age, the 5th Gen Tensor Cores support FP4 precision, allowing them to process data and run deep learning operations at very high rates. DLSS (Deep Learning Super Sampling) technology has evolved significantly, allowing games to deliver higher resolutions at faster frame rates thanks to sophisticated AI, and the capacity to train big models has doubled, making these cards well suited to demanding machine learning workloads.

For those who are unfamiliar with FP4 precision, it is basically a way of storing each number using just four bits, which speeds up calculations and consumes less power. Think of it as a "shortened version" of a number: instead of writing the value out with all of its digits, you keep only a rough approximation. Because each value carries less information, more values can be moved and processed at once. The result is less precise than wider formats such as FP16 or FP32, but it is sufficient for applications that value speed and efficiency, such as artificial intelligence and machine learning, where some precision can be traded for better performance.
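To make the "shortened version" idea concrete, here is a minimal sketch of rounding a few values to a 4-bit float. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit) and a single shared scale per block; the article does not say which FP4 layout or scaling scheme the Tensor Cores use, and the function name and sample weights are purely illustrative.

```python
# Magnitudes representable by a 4-bit E2M1 float (1 sign, 2 exponent, 1 mantissa bit).
# Assumption: E2M1 is used for illustration; the review does not name the FP4 layout.
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_fp4(values):
    """Round each value to the nearest FP4 number after scaling the block to the FP4 range."""
    peak = max(abs(v) for v in values)
    # One shared scale maps the largest magnitude in the block onto 6.0, the largest FP4 value.
    scale = peak / 6.0 if peak > 0 else 1.0
    quantized = []
    for v in values:
        target = abs(v) / scale
        nearest = min(E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        quantized.append((nearest if v >= 0 else -nearest) * scale)
    return quantized, scale


# Hypothetical weights, chosen only to show the rounding error.
weights = [0.82, -0.31, 0.04, 1.20, -0.67]
approx, scale = quantize_fp4(weights)
for original, stored in zip(weights, approx):
    print(f"{original:+.2f} stored as {stored:+.3f}")
```

Each stored value snaps to one of only 16 possible codes, which is where both the speed gain and the loss of precision come from.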

How will this affect you? Consider working on a huge project that involves training an AI model to handle millions of photos. Previous generations required hours, if not days, of waiting. Now, with the new Tensor cores, jobs can be completed in less time and with less power, making AI quicker and more efficient than ever. In games, DLSS 4.0 operates fluidly, intelligently producing frames without sacrificing quality, resulting in a smooth visual experience even in the most complex situations.

Fourth Generation RT Cores: A Revolution in Ray Tracing.

The fourth generation of RT cores provides faster and smarter performance. If you thought Ada Lovelace was the pinnacle of ray tracing, Blackwell goes further. According to NVIDIA, ray tracing throughput has doubled compared to the previous generation, making lighting, reflections, and shadows more lifelike than before, and with the fourth generation you can play games at maximum settings with fewer frame rate dips, even in complex scenes.

GDDR7 Memory: Maximum Speed.

After NVIDIA demonstrated the strength of the new cores, it's time to discuss GDDR7 memory, which is the lifeblood of the Blackwell architecture. The memory data rate (VRAM speed) is 30 gigabits per second per pin, significantly faster than the preceding GDDR6X. Total memory bandwidth reaches about 1.8 terabytes per second on the flagship RTX 5090 and 960 GB/s on the RTX 5080, allowing very high data transfer speeds. GDDR7 not only improves power efficiency but also helps the cards perform consistently even under harsh conditions.

If you don't know what memory speed is, let me explain. Imagine you're working on an 8K scene in 3D software; every movement demands a massive amount of data. Slower memory may cause slowdowns when modifying the scene. With GDDR7, data reaches the GPU much faster, which reduces the bottlenecks that impede data flow; in games, this means less stuttering and latency, even in open landscapes that demand frequent data loading.

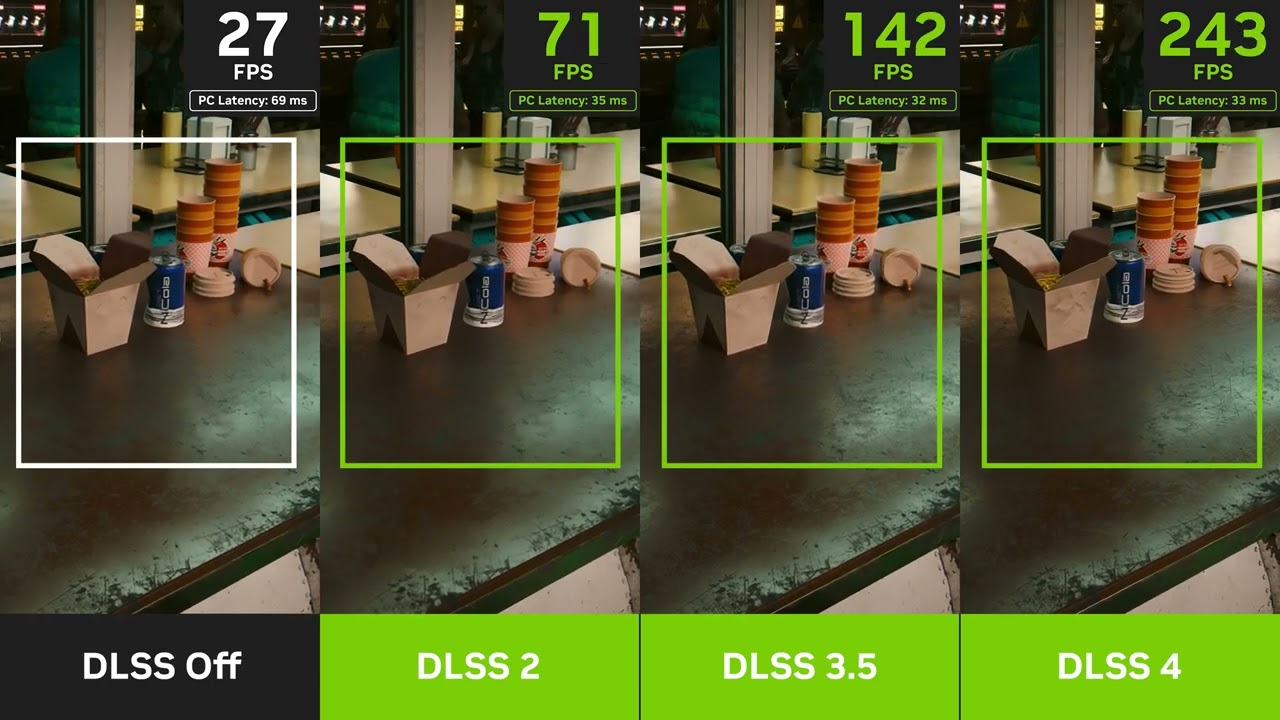

DLSS: From simple ideas to quantum leaps.

The fundamental objective of computer graphics designers has always been to develop ways to offer more realistic and smooth visuals without losing performance, and the technique DLSS (Deep Learning Super Sampling) represents the first step in this direction. Imagine if we could take a low-resolution scene and run it through a carefully trained neural network to transform it into a high-resolution picture without sacrificing any small details or quality. This was the challenge that DLSS was able to meet, and with time and successive updates, this technology was able to generate complete frames and comprehend the composition of the scene in its entirety, from shadows to reflections and even the details of the occlusion that appear when elements overlap.

Neural rendering is more than a technology.

What distinguishes neural rendering today is not just its capacity to increase performance but also its ability to provide unparalleled visual experiences. When we talk about technologies like DLSS 4, we're talking about a quantum leap that goes beyond simply boosting picture quality; it's a technology that makes graphics more interactive and immerses gamers and users in a digital environment that resembles reality down to the last detail.

In this regard, Multi Frame Generation serves as a striking illustration. According to NVIDIA, this technique can multiply the number of frames shown by up to 8 times compared with traditional rendering, resulting in much greater smoothness and a more dynamic gaming experience. Imagine you're playing a game that needs a fast response time and every movement and detail is rendered with amazing smoothness; this is what contemporary neural rendering technologies aim to offer.

How does neural rendering work?

Looking behind the scenes, the incorporation of neural networks into the rendering process has created new opportunities for picture quality enhancement. Neural shaders have been created to compress and improve data in incredible ways. These shaders are more than simply tools for reducing texture sizes and saving memory; they also aid in the creation of intricate lighting effects and breathtaking cinematic textures, giving games and movies an unprecedented level of realism.

One of the most important advancements in this field is "RTX Neural Faces," which is a novel technique to improve the quality of digital faces via artificial intelligence. Instead of using standard rendering methods, the system takes a simplified picture of a face and 3D data, then reconstructs it using a generative AI model to make it more natural and lifelike. This sort of innovation enables characters in games and movies to appear more alive and communicate their emotions more effectively, allowing users to fully immerse themselves in the narrative.

DLSS 4: Faster Framerates and Better Quality?!

The innovation journey does not end here; we have now arrived at DLSS 4, the next great thing. By integrating Multi Frame Generation, ray tracing, and powerful AI, we can now render 4K visuals at an ultra-high refresh rate while lowering memory usage and considerably improving efficiency. At the core of this technology is a transformer neural network model, the type of model that has transformed AI, which evaluates the relevance of every pixel in a picture, whether within a single image or across numerous frames.

This new approach is more than simply a technological enhancement; it fundamentally alters how we perceive visual information. It eliminates ghosting and enhances visual stability, even during the game's most frenetic moments. As a consequence, tough situations with complicated lighting or quick motions become clearer and more detailed, enhancing the gaming and viewing experience.

Is DLSS 4 compatible with every game?

One of the biggest challenges when releasing a new technology is guaranteeing compatibility with existing systems and applications. NVIDIA recognizes this; thus, both Multi Frame Generation and the new transformer-based models are designed to work with current DLSS integrations. This means that developers and users will not encounter compatibility concerns and will benefit from better performance without the need for major game and application upgrades.

Now that the GeForce RTX 50 series has been available for a few days, players will be able to experience a significant increase in performance owing to technologies supported in over 75 games and apps.

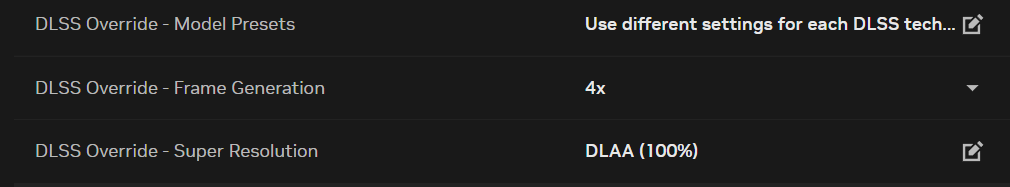

Don't worry if your favorite game isn't supported; older games and applications may not support the most recent DLSS technology. However, NVIDIA offers a solution with the DLSS Overrides function, which is now available through the new NVIDIA app. After downloading the GeForce Game Ready Driver and updating the app, users can access the DLSS Overrides options in the Graphics > Program Settings area, which include:

DLSS Override for Frame Generation: Allows GeForce RTX 50 Series users to employ Multi Frame Generation technology when Frame Generation is activated in-game.

DLSS Override for Model Presets: Enables the newest Frame Generation model for GeForce RTX 50/40 Series users, as well as the transformer model for both Super Resolution and Ray Reconstruction for all GeForce RTX users when DLSS is turned on in-game.

DLSS Override for Super Resolution: Changes the internal rendering resolution for DLSS Super Resolution, allowing DLAA or Ultra Performance Mode to be enabled while Super Resolution is activated in the game.

With these new capabilities, it is now quite simple to improve the graphic performance of games. With the NVIDIA app, users can effortlessly update and adjust their game settings with a few clicks, allowing them to enjoy a sophisticated visual experience without hassle.

Technical information for the PNY GeForce RTX 5080 ARGB OC graphics card

Chip number: GB203.

Memory type: GDDR7.

Total number of transistors: 45.6 billion.

Manufacturing technology: 5 nm.

CUDA cores: 10,752.

Tensor Cores: 5th generation, 1,801 AI TOPS.

RT Cores: 4th generation, 171 TFLOPS.

VRAM speed: 30 Gbps.

VRAM capacity: 16 GB.

Memory interface: 256 bits.

Bandwidth: 960 GB/s.

Base frequency: 2.30 GHz.

Maximum frequency: 2.78 GHz.

Power consumption: 360 watts.

The RTX 5080 is built around the GB203 chip on a 5 nm-class process, which lets it pack 45.6 billion transistors into a compact die while providing higher power efficiency. It also moves to PCIe 5.0, which offers twice the data transfer rate of the PCIe 4.0 interface used in the RTX 4080. Together, these changes are meant to boost data processing and graphics capability.

The RTX 5080 has 10,752 CUDA cores to boost graphics performance, while its 5th Gen Tensor Cores, rated at 1,801 AI TOPS, accelerate AI and deep learning applications and outperform the RTX 4080 in AI workloads. Its 4th Gen RT Cores, rated at 171 TFLOPS, speed up ray tracing and provide more realistic lighting and shadows than the RTX 4080's RT cores.

The RTX 5080 is powered by 16GB of GDDR7 memory operating at 30Gbps and a 256-bit interface, allowing data transmission speeds of up to 960GB/s, exceeding the RTX 4080, which has a slower memory type and speed. The RTX 5080 has a base frequency of 2.30GHz and can reach 2.78GHz, resulting in quicker performance and greater dynamic responsiveness than the RTX 4080.
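The 960 GB/s figure follows directly from the per-pin data rate and the bus width quoted above. The short sketch below reproduces that arithmetic; the function name is illustrative and not part of any NVIDIA tool.

```python
def memory_bandwidth_gb_per_s(data_rate_gbps_per_pin: float, bus_width_bits: int) -> float:
    """Peak bandwidth = per-pin data rate x number of data pins, converted from bits to bytes."""
    return data_rate_gbps_per_pin * bus_width_bits / 8


# RTX 5080 values from the specification list: 30 Gbps per pin on a 256-bit interface.
rtx_5080_bandwidth = memory_bandwidth_gb_per_s(30, 256)
print(f"RTX 5080 peak memory bandwidth: {rtx_5080_bandwidth:.0f} GB/s")
```

This is a theoretical peak; sustained bandwidth in real workloads is lower.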

In terms of power consumption, the RTX 5080 consumes roughly 360W, demonstrating a balance between high power and thermal efficiency, whilst the RTX 4080 consumes somewhat less power, albeit at the sacrifice of performance in graphics-intensive scenarios. These distinctions between the two cards emphasize how the RTX 5080 is a step ahead due to contemporary manufacturing processes, the number of transistors, and specialized cores, making it the best choice for applications requiring outstanding graphics capability and artificial intelligence.

PNY GeForce RTX 5080 ARGB OC Technical Testing Session

Design:

When it comes to current graphics cards, the PNY GeForce RTX 5080 ARGB OC is more than just a technical component; it's a visual centerpiece that balances raw power with good looks. With its futuristic design and reactive lighting, this card shows that it is not just a performance booster but also an aesthetic component that adds to the look of any professional build.

The card has the Infinity ARGB design, which PNY created to represent a balance of beauty and technology. At first glance, you'll note how the ARGB lighting blends with the design features, giving the card a dynamic personality that turns it into a moving work of art inside the case. The lighting is more than simply an aesthetic touch; it can be customized in a variety of ways, letting users adjust the colors and effects to match their personality and style.

The integrated illumination extends throughout the frame surrounding the triple fans, giving a gradient effect that enhances the card's presence. This effect also extends to the backplate, which has a stunning backlight that transforms the metal plate into a shining piece of futuristic technology. This distinctive design not only provides the card a luxury appearance, but it also mixes in smoothly with the rest of the build, especially if you have a transparent case that enables the lights to be presented to their full potential.

The card is 329 mm long, 138 mm wide, and 71 mm high, making it a triple-slot card that will stand out in any gaming build. Its aggressive style and clean geometric lines draw attention inside the case, especially when combined with ARGB-enabled components, which turn the entire build into a dazzling light display.

From the front, the card's triple fans encircled by ARGB lights stand out, giving it a strong yet stylish appearance. From the rear, the metal backplate is more than simply an attractive feature; it also helps to optimize cooling and heat distribution, and the embedded lighting adds a modern touch that makes it appear to be throbbing with energy.

Ports:

The PNY GeForce RTX 5080 ARGB OC features a contemporary port array that ensures broad compatibility with the most recent monitors and high-resolution displays. The card has one HDMI 2.1b connector and three DisplayPort 2.1b connectors, allowing for gameplay at 4K resolution at up to 480Hz or 8K at up to 165Hz with DSC (Display Stream Compression) technology. This results in an immersive visual experience with high frame rates and excellent image quality.
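A rough calculation shows why those top display modes depend on DSC. The sketch below estimates the uncompressed data rate of the active pixels only and compares it with an 80 Gbps raw link rate, which is an assumption here (the highest DisplayPort 2.1 rate, four lanes at 20 Gbps); the review does not state which link rate the card's ports run at. It also assumes 10-bit color, that is, 30 bits per pixel, and the helper names are illustrative.

```python
# Assumption: highest DisplayPort 2.1 link rate (4 lanes x 20 Gbps), raw, before encoding overhead.
UHBR20_RAW_GBPS = 80.0


def uncompressed_gbps(width, height, refresh_hz, bits_per_pixel=30):
    """Active-pixel data rate in Gbps; blanking intervals are ignored, so real needs are a bit higher."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def needs_dsc(width, height, refresh_hz, bits_per_pixel=30):
    """True when the uncompressed stream would not fit in the assumed raw link rate."""
    return uncompressed_gbps(width, height, refresh_hz, bits_per_pixel) > UHBR20_RAW_GBPS


modes = {"4K at 480Hz": (3840, 2160, 480), "8K at 165Hz": (7680, 4320, 165)}
for label, (w, h, hz) in modes.items():
    print(f"{label}: {uncompressed_gbps(w, h, hz):.1f} Gbps uncompressed, DSC needed: {needs_dsc(w, h, hz)}")
```

Under these assumptions, both modes exceed what the link can carry uncompressed, which is consistent with the DSC requirement mentioned above.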

Cooling:

Despite the emphasis on aesthetics, PNY did not overlook one of the most crucial features of strong graphics cards: an excellent cooling system that ensures consistent performance even under heavy loads. This system is built on a triple fan design, with modified fan blades to boost airflow and air pressure, resulting in increased cooling efficiency.

In addition, counter-rotating fan technology is used, in which the outer fans spin in the opposite direction to the middle fan, decreasing air turbulence and improving airflow, hence boosting thermal performance. When temperatures fall below 50°C, the fans stop automatically, minimizing power consumption and noise. This provides a quiet and efficient user experience while maintaining suitable card temperatures.

Power:

The card draws power through a single 16-pin power connector, and a 16-pin to 3x 8-pin adapter is included for compatibility with various power supplies. A power supply of at least 850W is recommended for system stability, particularly when running high-performance apps and games. The card features a PCI-Express 5.0 x16 interface, which delivers double the bandwidth of the previous generation, resulting in more efficient data transfer between the card and the rest of the system components.
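The "double the bandwidth" claim can be checked from the published per-lane transfer rates: 16 GT/s for PCIe 4.0 and 32 GT/s for PCIe 5.0, both using 128b/130b encoding. The sketch below computes the theoretical one-direction bandwidth of an x16 link; the function and constant names are illustrative.

```python
# Per-lane transfer rates in gigatransfers per second (one bit per transfer).
PCIE_GT_PER_S = {"4.0": 16, "5.0": 32}
# Both generations use 128b/130b line encoding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_per_s(generation: str, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    return PCIE_GT_PER_S[generation] * ENCODING_EFFICIENCY * lanes / 8


gen4 = pcie_bandwidth_gb_per_s("4.0")
gen5 = pcie_bandwidth_gb_per_s("5.0")
print(f"PCIe 4.0 x16: {gen4:.1f} GB/s")
print(f"PCIe 5.0 x16: {gen5:.1f} GB/s")
print(f"Ratio: {gen5 / gen4:.1f}x")
```

Whether a game ever saturates even the PCIe 4.0 figure is a separate question, and that is what the two test systems are meant to probe.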

Performance tests:

It didn't make sense to leave a card this strong without testing its limits, so we pushed it above 2775 MHz to discover what it was capable of. To guarantee a thorough study, we conducted the tests on two separate machines: one with high-end hardware for optimum performance and another with lesser specs to evaluate how the card would perform in diverse situations and scenarios. The testing covered a variety of demanding applications and the most recent games, allowing us to evaluate performance under both intense stress and routine use.

Specifications of the main system used to test the card in all tests:

Motherboard model: ROG Z890 Maximus Hero.

Processor: Intel Core Ultra 9 285K.

Cooler: Ryujin III 360 Extreme.

RAM: Kingston Fury DDR5 16GB x 2.

Power supply: TUF 1000W Gold.

Specifications of the lower-end system, which has a PCIe 4.0 slot and was used for some of the tests:

Motherboard: ASUS Prime B760M-K.

Processor: Intel Core i5-12400F.

Memory: Corsair Vengeance DDR5 5200 MT/s CL40 16 GB.

Power Supply: XPG Core Reactor II VE 750W Gold.

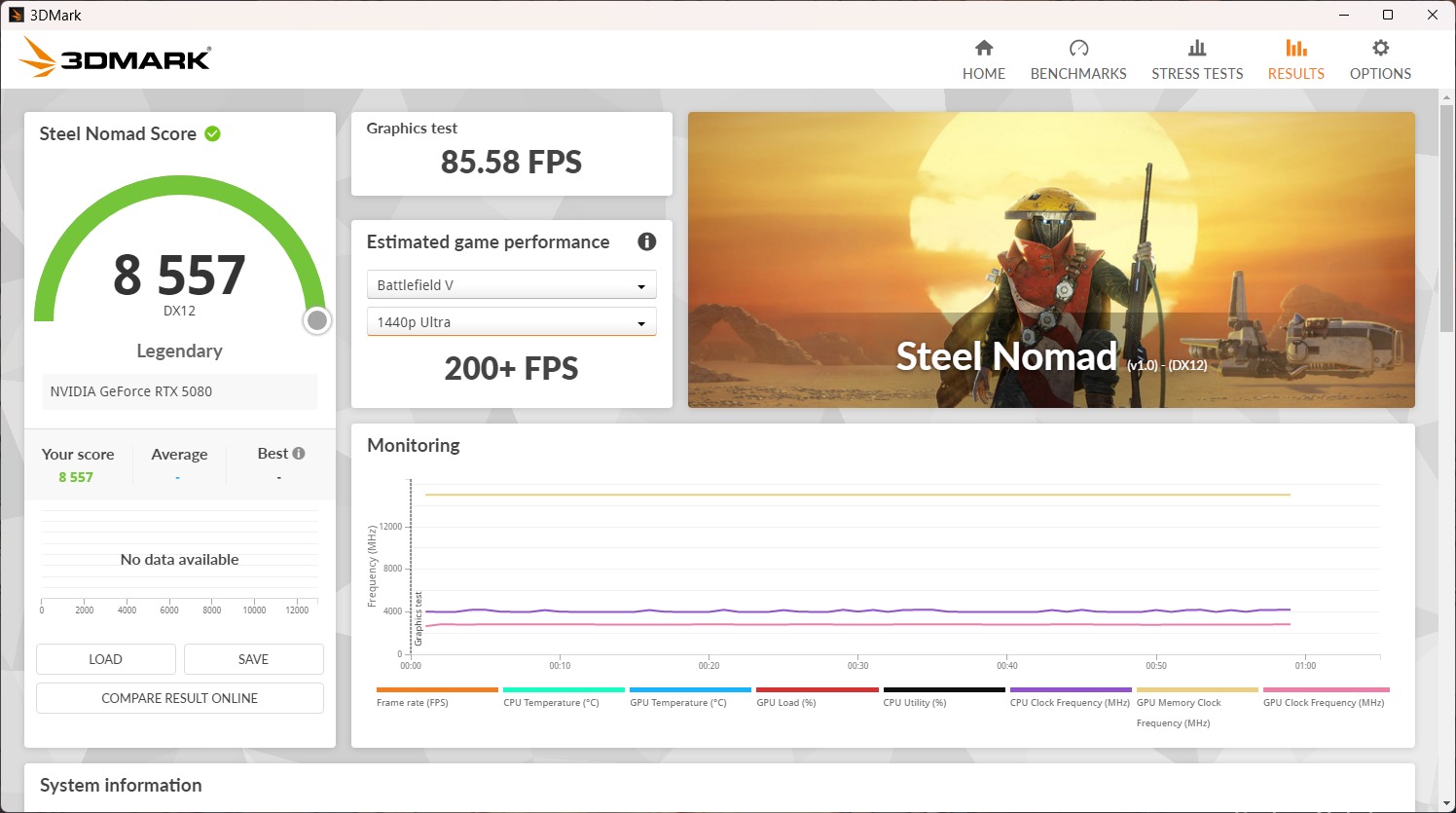

3DMARK

Steel Nomad Score

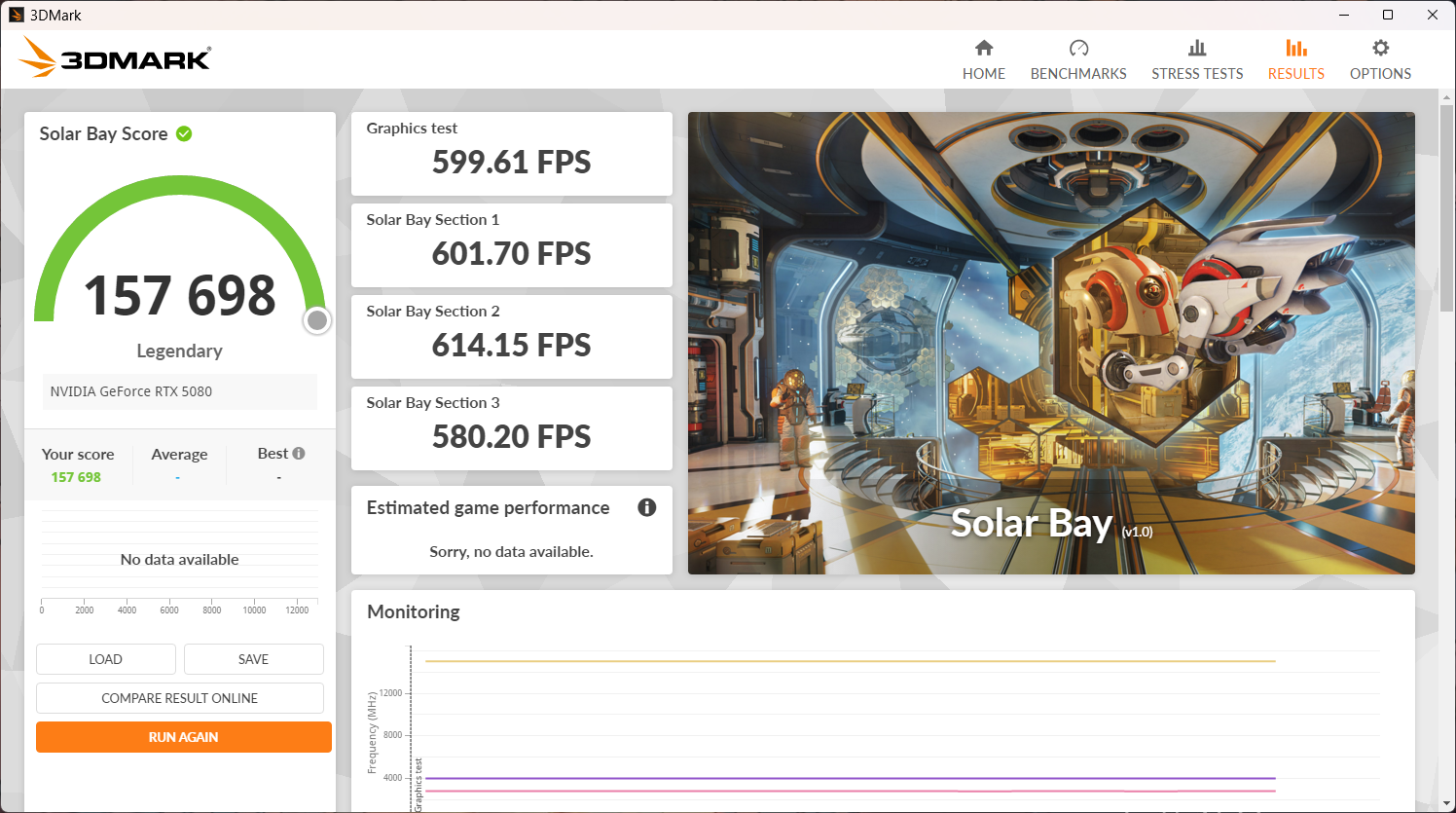

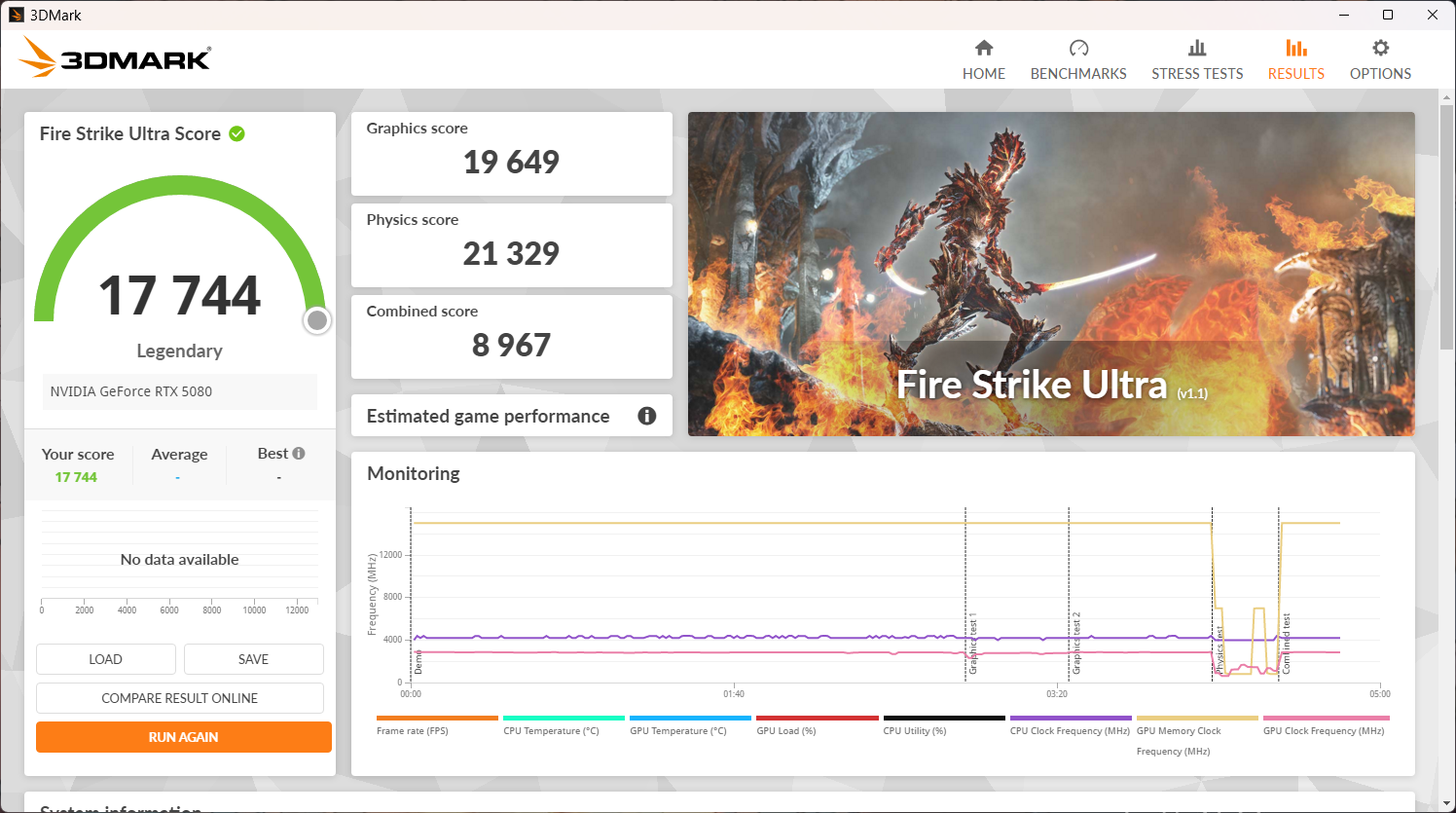

Solar Bay Score

Fire Strike Ultra Score.

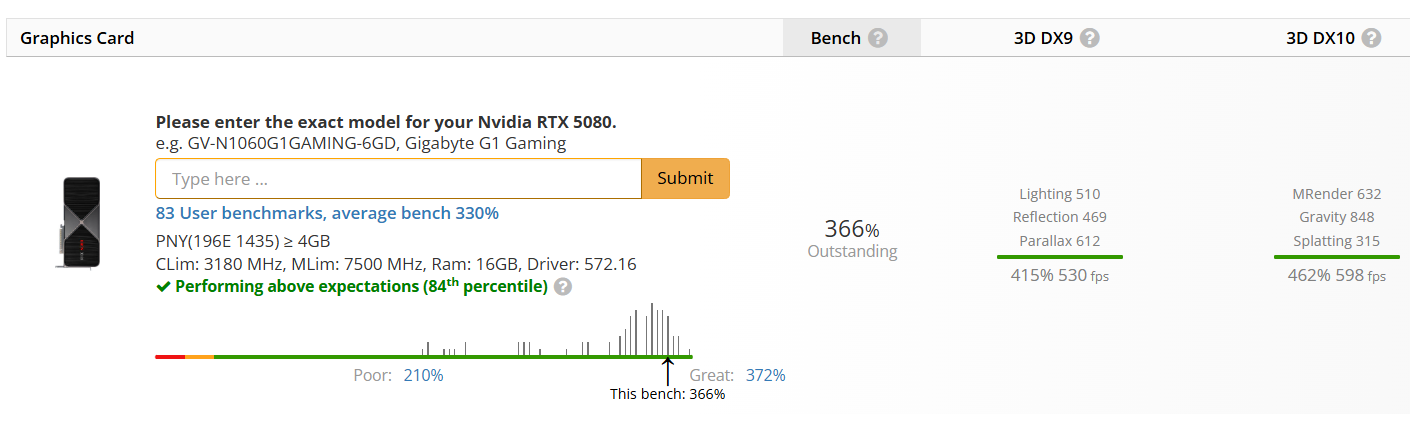

UserBenchmark

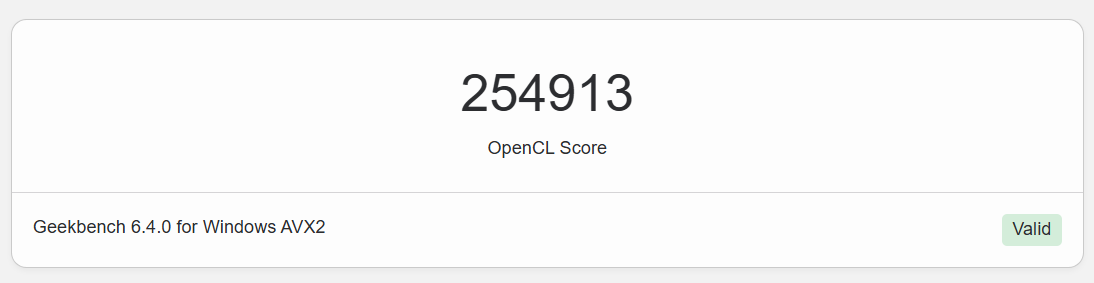

Geekbench

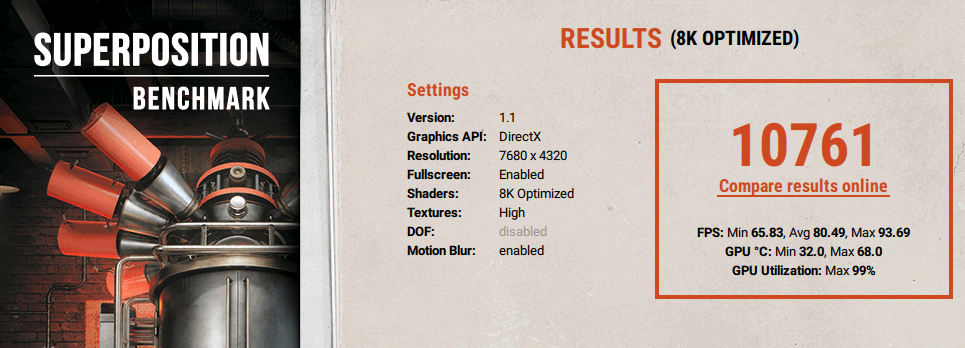

Superposition Benchmark

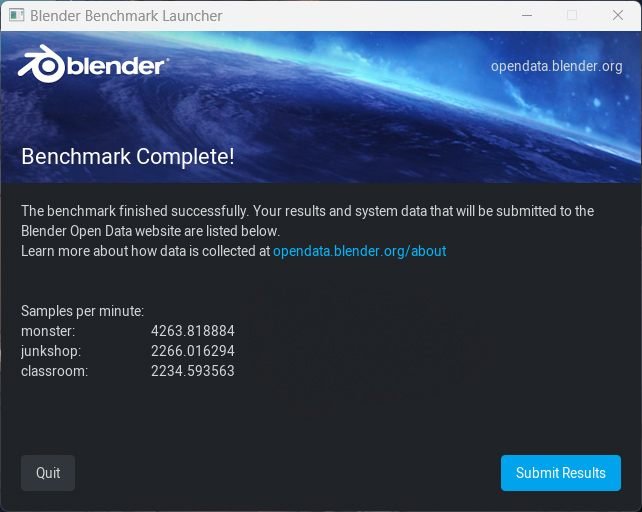

Blender Benchmark

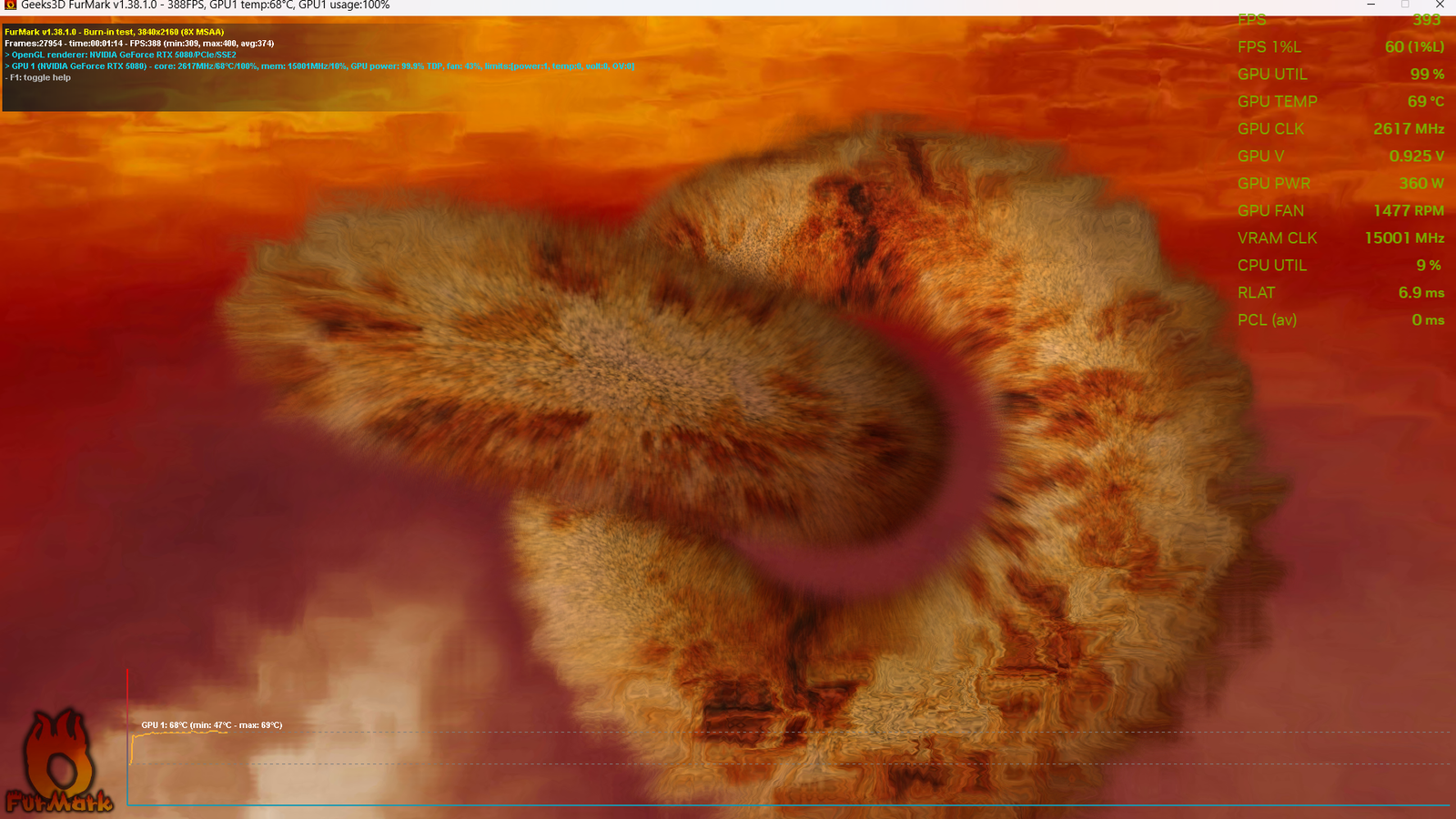

Furmark

After all these tough tests and after pushing it to its limits, I finally decided to throw the card into hell to see whether it would survive the extreme heat or give in. The torture run began with Furmark running at 4K resolution, where I pushed it to its maximum possible frequencies, reaching 2600 MHz and 2820 MHz, trying to force it to show any signs of weakness; however, despite the intense pressure, the card held firm and maintained a stable temperature.

Game tests:

Cyberpunk 2077

Marvel Rivals

Marvel Rivals was tested at maximum settings and gave outstanding performance across all resolutions, particularly when DLSS 4 and frame generation were enabled.

4K resolution

The game performed well in 4K native mode at 74 frames per second. With DLSS 4 Quality + 4x MFG enabled, performance improved dramatically to 275 fps. Switching to DLSS 4 Performance + 4x MFG increased performance to 320 fps, delivering an extremely smooth experience.

2K resolution

At 2K Native, the game achieved 130 frames per second. With DLSS 4 Quality + 4x MFG enabled, performance jumped dramatically to 454 fps, while DLSS 4 Performance + 4x MFG achieved an amazing 507 fps.

FHD resolution

The game ran smoothly at 202 fps at native FHD resolution. When 4x MFG was enabled, performance increased to 580 fps, providing an extremely smooth experience even in the most crowded scenes.

Alan Wake II

Alan Wake II was tested at maximum settings with path tracing at 4K, and performance differed sharply between native resolution and DLSS Quality with 4x MFG.

4K resolution

At 4K native, the game managed only 7 fps because of the heavy path tracing requirements. With DLSS Quality + 4x MFG enabled, performance increased dramatically to 150 fps, making the experience considerably smoother and more responsive.

Hogwarts Legacy

Hogwarts Legacy was tested at maximum settings with ray tracing at 4K and performed admirably, with considerable increases when DLSS was enabled.

4K resolution

At 4K Native, the game captured 51 frames per second, which is acceptable but may not be optimal for dramatic sequences. With DLSS Quality + 4x MFG enabled, performance increased to 164 fps, resulting in a smooth gaming experience with ray tracing.

Star Wars Outlaws

Star Wars Outlaws was tested at maximum settings at 4K with ray tracing, and while the game initially performed poorly at native resolution, DLSS technology substantially improved performance.

4K resolution

When running the game in 4K native, performance was poor at 24 fps, which might affect the overall smoothness of the experience. However, when DLSS Quality + 4x MFG was activated, performance increased to 105 fps, making it far more enjoyable.
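The native and DLSS Quality + 4x MFG figures reported in the game tests above can be put side by side with a few lines of arithmetic. The sketch below computes the overall uplift for each title and, assuming exactly four displayed frames per rendered frame, the rendered frame rate implied by the 4x MFG result. That last figure is an estimate derived from the reported numbers, not something measured in the review; the FHD result is left out because its DLSS mode is not stated.

```python
# (title, native fps, fps with DLSS Quality + 4x MFG), as reported in the game tests.
RESULTS = [
    ("Marvel Rivals 4K", 74, 275),
    ("Marvel Rivals 2K", 130, 454),
    ("Alan Wake II 4K", 7, 150),
    ("Hogwarts Legacy 4K", 51, 164),
    ("Star Wars Outlaws 4K", 24, 105),
]


def speedup(native_fps, boosted_fps):
    """How many times more frames are displayed compared with native rendering."""
    return boosted_fps / native_fps


def implied_rendered_fps(displayed_fps, mfg_factor=4):
    """Rendered frames per second if exactly one in every mfg_factor displayed frames is rendered."""
    return displayed_fps / mfg_factor


for title, native, boosted in RESULTS:
    print(f"{title}: {speedup(native, boosted):.2f}x uplift, "
          f"about {implied_rendered_fps(boosted):.1f} rendered fps behind {boosted} displayed")
```

Looking at the implied rendered rate alongside the displayed rate helps separate what the GPU actually renders from what frame generation adds on top.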

Experience summary

In my view, after conducting all of the tests, the RTX 5080's raw performance is unimpressive. It turns out that it depends almost entirely on AI techniques, which is understandable given the architecture employed, but NVIDIA was expected to deliver a considerable gain in raw performance rather than relying solely on AI.

My experience with DLSS 4 was wonderful, especially now that the ghosting issue has been resolved, which is a significant improvement. However, when I activated the 4x setting, various problems arose, including visual distortions and oddly shaking elements. These problems were especially noticeable when using MFG to raise the frame rate from 20 to 80 frames per second, which is to be expected given that 60 of these frames are generated and just 20 are actually rendered. At higher frame rates, however, I had no severe problems, and the added input latency was barely noticeable while using 4x.
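The split between rendered and generated frames in that 20-to-80 example can be checked with simple arithmetic. The sketch below assumes that 4x MFG displays exactly four frames for every rendered one; the function name is illustrative.

```python
def frame_breakdown(rendered_fps, mfg_factor):
    """Return displayed fps, generated fps, and the gap in ms between two rendered frames."""
    displayed_fps = rendered_fps * mfg_factor
    generated_fps = displayed_fps - rendered_fps
    rendered_interval_ms = 1000 / rendered_fps
    return displayed_fps, generated_fps, rendered_interval_ms


displayed, generated, interval_ms = frame_breakdown(rendered_fps=20, mfg_factor=4)
print(f"Displayed: {displayed} fps, generated: {generated} fps, rendered: 20 fps")
print(f"Time between rendered frames: {interval_ms:.0f} ms")
```

With only 20 rendered frames per second, the generator has to fill a 50 ms gap between real frames, which leaves more room for visible artifacts than at higher base frame rates.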

I tested the card on a system with a PCIe 4.0 slot and a lower-end processor, despite the fact that the card is designed for PCIe 5.0 and powerful processors. Surprisingly, I saw no bottlenecks or performance problems when using the older PCIe interface. On the contrary, the performance was extremely close, with a difference of no more than 2-3 frames per second, and occasionally there was no discernible difference at all. This suggests that the card can deliver most of its power without depending heavily on the processor. In terms of gaming performance, PCIe 5.0 may not have a substantial influence, or at least it did not make a visible difference in my experience.

Personal viewpoint

As for why the corporation is depending on AI technology rather than enhancing raw performance, I believe there are various reasons for this approach. On the one hand, the introduction of AI technologies such as DLSS improves visual performance without requiring a major increase in hardware, lowering manufacturing costs and maintaining energy efficiency. Furthermore, present market demands have shifted toward a smoother and more realistic gaming experience, and AI helps to achieve this by boosting visual quality and adding calculated frames without dramatically reducing actual performance.

NVIDIA's reliance on artificial intelligence also provides a technological competitive edge, allowing it to produce innovative technologies that stay up with future requirements, even if raw performance falls short. Large corporations typically plan for the distant future, and investing in artificial intelligence appears to be a strategic bet, as these technologies are expected to see continuous improvement, contributing to higher overall performance and making products more compatible with advances in the field of games and applications.

However, with this apparent trend toward more dependence on AI technologies such as DLSS, an important question arises: are we on the verge of abandoning raw performance and the conventional way of boosting game performance? It is clear that hardware development has become primarily focused on improving efficiency rather than increasing raw power, as companies rely on artificial intelligence to compensate for the lack of actual performance by reconstructing frames and raising the resolution in intelligent ways. Although these technologies have proven to be very effective, this may mean that the future of graphics processor development will not be based on increasing actual power, but rather on AI-driven techniques.

Is this a negative thing? The response is subjective and varies depending on the player. Some believe that these methods provide a seamless gaming experience with good visual quality, even on hardware without superpowers. Others argue that this move is a deception, as better performance is promised theoretically but not based on the card's actual power. The issue becomes clear when we see that performance without these technologies has not improved noticeably, which may imply that players will be forced to enable DLSS or similar technologies in order to achieve acceptable performance in the future, rather than relying on hardware power as in previous generations.

As a result, this generation might be a watershed moment in the graphics card business, with typical advances in raw performance becoming less significant and a greater dependence on AI to compensate for any shortcomings. The key question is whether these solutions will continue to be successful and capable of providing a consistent experience without compromise in the long run. This is what the future will disclose.

So, while the RTX 5080's raw performance is clearly lacking, the application of AI technology offers practical ways to improve the user experience while balancing performance, efficiency, and cost. Despite some of the flaws I found, I am optimistic that future versions will bring significant increases in raw performance, as well as the continuous development of AI technology.

Where can I purchase the card?

If you want to buy an RTX 5080 card, you may do so from the Geekay shop.

Design: 9

Manufacturing materials: 8

Performance: 7

Cooling: 8

Overclocking: 8

Overall: 8 (Distinguished)

The PNY GeForce RTX 5080 ARGB OC card offers a balanced experience between performance and modern AI technologies. Although the raw performance may not be at the expected level, DLSS 4 and MFG technologies compensate for this deficiency, but at a "cost."