What's AI Ethics? | Definition from TechTarget

AI ethics is a system of moral principles and techniques intended to inform the development and responsible use of artificial intelligence technology. As AI has become integral to products and services, organizations are also starting to develop AI codes of ethics.

An AI code of ethics, also called an AI value platform, is a policy statement that formally defines the role of artificial intelligence as it applies to the development and well-being of humanity. The purpose of an AI code of ethics is to provide stakeholders with guidance when they face an ethical decision about the use of artificial intelligence.

Isaac Asimov, the science fiction writer, foresaw the potential dangers of autonomous AI agents in 1942, long before AI systems were developed. He created the Three Laws of Robotics as a means of limiting those risks. In Asimov's code of ethics, the first law forbids robots from actively harming humans or, through inaction, allowing harm to come to humans. The second law orders robots to obey humans unless the orders conflict with the first law. The third law orders robots to protect themselves insofar as doing so is consistent with the first two laws.

Today, the potential dangers of AI systems include AI replacing human jobs, AI hallucinations, deepfakes and AI bias. These issues can lead to layoffs, misleading information, fake imitations of other people's images or voices, and biased decisions that could negatively affect human lives.

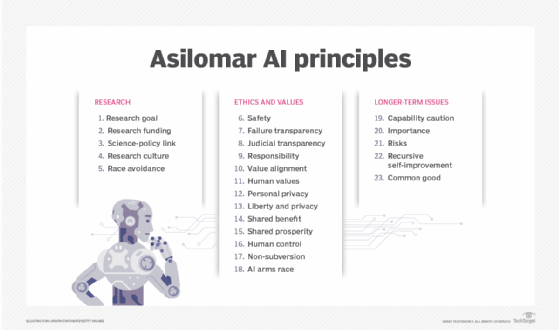

The rapid advancement of AI in the past 10 years has spurred groups of experts to develop safeguards that protect humans against the risks of AI. One such group is the nonprofit Future of Life Institute, founded by Massachusetts Institute of Technology cosmologist Max Tegmark, Skype co-founder Jaan Tallinn and Google DeepMind research scientist Victoria Krakovna. The institute worked with AI researchers and developers, as well as scholars from many disciplines, to create the 23 guidelines now known as the Asilomar AI Principles.

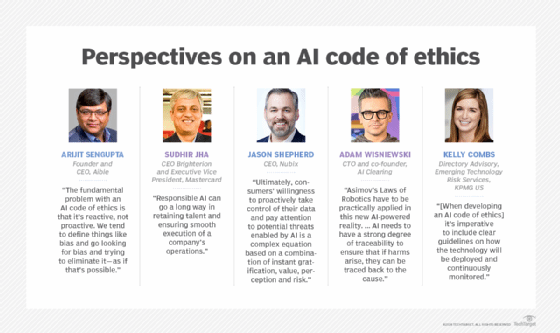

Kelly Combs, director, advisory, of Emerging Technology Risk Services for KPMG US, said that, when developing an AI code of ethics, "it is crucial to include clear guidelines on how the technology will be deployed and continuously monitored." These policies should mandate measures that guard against unintended bias in machine learning (ML) algorithms, continuously detect drift in data and algorithms, and track both the provenance of data and the identity of those who train algorithms.
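Combs's call to continuously detect drift can be made concrete with a widely used drift statistic, the population stability index (PSI), which compares how a feature is distributed in live data against its training baseline. The sketch below is a minimal illustration under stated assumptions, not KPMG's method: the equal-width binning, the `eps` floor for empty bins and the 0.2 alert threshold are all illustrative choices.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare a feature's live distribution with its training baseline.

    Bin edges come from the baseline (equal-width over its range); live values
    outside that range are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical alert threshold; 0.2 is a common rule of thumb, not a standard.
DRIFT_THRESHOLD = 0.2

baseline = [float(x % 50) for x in range(1000)]          # training-time values
live_same = [float(x % 50) for x in range(500)]          # same distribution
live_shifted = [float(30 + x % 50) for x in range(500)]  # shifted upward

print(population_stability_index(baseline, live_same))  # 0.0
print(population_stability_index(baseline, live_shifted) > DRIFT_THRESHOLD)  # True
```

In practice, a check like this would run on a schedule for each monitored feature, with alerts routed to whoever owns the model.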

Principles of AI ethics

There isn't one agreed-upon set of AI ethics principles that someone can follow when implementing an AI system. Some frameworks, for example, draw on the Belmont Report, a 1979 paper created by the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. It set out the following three guiding principles for the ethical treatment of human subjects:

- Respect for persons. This recognizes the autonomy of individuals and relates to the idea of informed consent.

- Beneficence. This relates to the idea of "do no harm."

- Justice. This principle focuses on fairness and equality.

Although the Belmont Report was originally developed for research involving human subjects, academic researchers and policy institutes have recently applied it to AI development. The first principle relates to consent and transparency about individual rights when people engage with AI systems. The second relates to ensuring that AI systems don't cause harm, such as by amplifying biases. The third relates to how equitably access to AI is distributed.

Other common ideas in AI ethics principles include the following:

- Transparency and accountability.

- Human-centered development and use.

- Safety.

- Sustainability and impact.

Why are AI ethics vital?

AI is a technology designed by humans to replicate, augment or replace human intelligence. These tools typically rely on large volumes of various types of data to develop insights. Poorly designed projects built on data that is faulty, inadequate or biased can have unintended, potentially harmful consequences. Moreover, the rapid advancement of algorithmic systems means that, in some cases, it isn't clear how the AI reached its conclusions, so humans are essentially relying on systems they can't explain to make decisions that could affect society.

An AI ethics framework is important because it highlights the risks and benefits of AI tools and establishes guidelines for their responsible use. Developing a system of moral tenets and techniques for using AI responsibly requires the industry and interested parties to examine major social issues and, ultimately, the question of what makes us human.

What are the ethical challenges of AI?

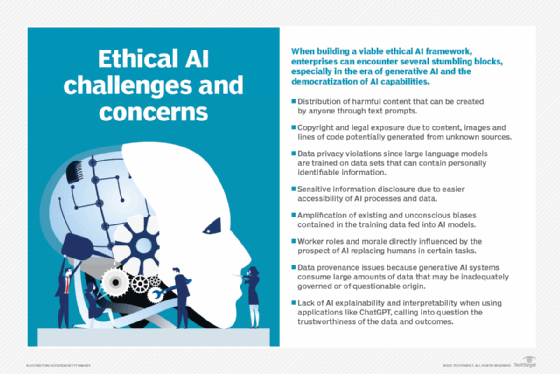

Enterprises face the following ethical challenges in their use of AI technologies:

- Explainability. When AI systems go awry, teams must be able to trace through a complex chain of algorithmic systems and data processes to find out why. Organizations using AI should be able to explain the source data, the resulting data, what their algorithms do and why they do it. "AI needs to have a strong degree of traceability to ensure that, if harms arise, they can be traced back to the cause," said Adam Wisniewski, CTO and co-founder of AI Clearing.

- Accountability. Society is still working out who is responsible when decisions made by AI systems have catastrophic consequences, including loss of capital, health or life. The process of assigning accountability for the consequences of AI-based decisions should involve a range of stakeholders, including lawyers, regulators, AI developers, ethics bodies and citizens. One challenge is finding the right balance when an AI system might be safer than the human activity it's duplicating but still causes problems, such as weighing the merits of autonomous driving systems that cause fatalities, but far fewer than human drivers do.

- Fairness. In data sets involving personally identifiable information, it's extremely important to ensure that there are no biases in terms of race, gender or ethnicity.

- Ethics. AI algorithms can be used for purposes other than those for which they were created. For example, people might use the technology to make deepfakes of others or to manipulate others by spreading misinformation. Wisniewski said these scenarios should be analyzed at the design stage to minimize the risks and to introduce safety measures that reduce the adverse effects in such cases.

- Privacy. Because AI requires vast amounts of data to train properly, some companies are using publicly available data, such as posts from web forums or social media, for training. Companies such as Facebook, Google and Adobe have also added language to their policies stating that they can use customer data to train their AI models, sparking controversy over how user data is protected.

- Job displacement. Instead of using AI to make human jobs easier, some organizations might choose to use AI tools to replace human jobs altogether. This practice has been met with controversy, as it directly affects the people it replaces, and AI systems are still prone to hallucinations and other imperfections.

- Environmental impact. AI models require a lot of energy to train and run, leaving a large carbon footprint. If the electricity they use is generated primarily from coal or other fossil fuels, they cause even more pollution.
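The explainability challenge above, together with the provenance tracking Combs recommends, implies logging enough about each AI decision to trace it back to its cause later. The following is a minimal Python sketch of such an audit record; the `DecisionRecord` fields, the SHA-256 fingerprinting and the `trace` helper are hypothetical choices for illustration, not a standard schema or any vendor's product.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any


def fingerprint(obj: Any) -> str:
    """SHA-256 of a JSON-serializable object; sorted keys make it order-independent."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class DecisionRecord:
    # Illustrative fields only; not a standard audit schema.
    model_version: str
    training_data_hash: str  # provenance: which data the model learned from
    trained_by: str          # identity of whoever trained the model
    input_hash: str          # which input produced this decision
    output: str
    timestamp: str


def record_decision(model_version: str, training_data: Any, trained_by: str,
                    inputs: Any, output: str) -> DecisionRecord:
    return DecisionRecord(
        model_version=model_version,
        training_data_hash=fingerprint(training_data),
        trained_by=trained_by,
        input_hash=fingerprint(inputs),
        output=output,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


def trace(log: list[DecisionRecord], inputs: Any) -> list[DecisionRecord]:
    """Find every logged decision made on exactly these inputs."""
    target = fingerprint(inputs)
    return [rec for rec in log if rec.input_hash == target]


# Hypothetical loan-scoring example.
training_rows = [{"income": 52000, "approved": True},
                 {"income": 18000, "approved": False}]
audit_log = [record_decision("credit-model-1.3", training_rows,
                             "analyst@example.com", {"income": 40000}, "declined")]

for rec in trace(audit_log, {"income": 40000}):
    print(json.dumps(asdict(rec), indent=2))
```

Storing hashes rather than raw inputs keeps personal data out of the log while still letting an investigator confirm which data and model version produced a disputed decision.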

The public release and rapid adoption of generative AI applications such as ChatGPT and Dall-E, which are trained on existing data to generate new content, amplify the ethical issues related to AI, introducing risks of misinformation, plagiarism, copyright infringement and harmful content.

What are the benefits of ethical AI?

The rapid acceleration of AI adoption across businesses has coincided with -- and, in many cases, helped fuel -- two major trends: the rise of customer-centricity and the rise in social activism.

"Businesses are rewarded not only for providing personalized products and services, but also for upholding customer values and doing good for the society in which they operate," said Sudhir Jha, CEO at Brighterion and executive vice president at Mastercard.

AI plays a huge role in how consumers interact with and perceive a brand. Responsible use is necessary to ensure a positive impact. In addition to consumers, employees want to feel good about the businesses they work for. "Responsible AI can go a long way in retaining talent and ensuring smooth execution of a company's operations," Jha said.

What's an AI code of ethics?

A proactive approach to ensuring ethical AI requires addressing the following three key areas, according to Jason Shepherd, CEO at Atym:

- Policy. This includes developing the appropriate framework for driving standardization and establishing regulations. Documents like the Asilomar AI Principles can be useful for starting the conversation. Government agencies in the U.S., Europe and elsewhere have launched efforts to ensure ethical AI, and a raft of standards, tools and techniques from research bodies, vendors and academic institutions are available to help organizations craft AI policy. See the "Resources for developing ethical AI" section below. Ethical AI policies need to address how to deal with legal issues when something goes wrong. Companies should consider incorporating AI policies into their own codes of conduct. But effectiveness depends on employees following the rules, which might not always be realistic when money or prestige is on the line.

- Education. Executives, data scientists, front-line employees and consumers all need to understand policies, key considerations and the potential negative effects of unethical AI and fake data. One big concern is the tradeoff between the convenience of data sharing and AI automation and the potential negative repercussions of oversharing or adverse automation. "Ultimately, consumers' willingness to proactively take control of their data and pay attention to potential threats enabled by AI is a complex equation based on a combination of instant gratification, value, perception and risk," Shepherd said.

- Technology. Executives also need to architect AI systems to automatically detect fake data and unethical behavior. This requires examining not just a company's own AI, but also vetting suppliers and partners for malicious use of AI. Examples include deploying deepfake videos and text to undermine a competitor or using AI to launch sophisticated cyberattacks. This will become more of an issue as AI tools become commoditized. To combat this potential snowball effect, organizations must invest in defensive measures rooted in open, transparent and trusted AI infrastructure. Shepherd believes this will give rise to the adoption of trust fabrics that provide a system-level approach to automating privacy assurance, ensuring data confidence and detecting unethical use of AI.

Examples of AI codes of ethics

An AI code of ethics can spell out the principles and provide the motivation that drives appropriate behavior. For example, the following summarizes Mastercard's AI code of ethics:

- An ethical AI system must be inclusive, explainable and positive in nature, and it must use data responsibly.

- An inclusive AI system is unbiased. AI models require training on large and diverse data sets to help mitigate bias. They also require continued attention and tuning to correct any problematic attributes picked up in the training process.

- An explainable AI system should be manageable so that companies can implement it ethically. Systems should be transparent and explainable.

- An AI system should have and maintain a positive purpose. In many cases, for example, this means assisting humans in their work. AI systems should be implemented in a way that prevents them from being used for negative purposes.

- Ethical AI systems should also respect data privacy rights. Even though AI systems often require a vast amount of data to train, companies shouldn't sacrifice their users' or other individuals' data privacy rights to obtain it.

Companies such as Mastercard, Salesforce and Lenovo have signed a voluntary AI code of conduct, committing them to the responsible development and management of generative AI systems. The code builds on Canada's work on a code of ethics for generative AI, as well as input gathered from a range of stakeholders.

Google also has its own guiding AI principles, which emphasize accountability, potential benefits, fairness, privacy, safety, and social and other human values.

Resources for developing ethical AI

The following is a sampling of the growing number of organizations, policymakers and regulatory standards focused on developing ethical AI practices:

- AI Now Institute. The institute focuses on the social implications of AI and on policy research in responsible AI. Research areas include algorithmic accountability, antitrust concerns, biometrics, worker data rights, large-scale AI models and privacy. The "AI Now 2023 Landscape: Confronting Tech Power" report provides a deep dive into many ethical issues that can be helpful in developing ethical AI policies.

- Berkman Klein Center for Internet & Society at Harvard University. The center fosters research into the big questions related to the ethics and governance of AI. Research supported by the center has tackled topics that include information quality, algorithms in criminal justice, development of AI governance frameworks and algorithmic accountability.

- CEN-CENELEC Joint Technical Committee 21 Artificial Intelligence. JTC 21 is an ongoing European Union (EU) initiative for various responsible AI standards. The group produces standards for the European market and informs EU legislation, policies and values. It also specifies technical requirements for characterizing transparency, robustness and accuracy in AI systems.

- Institute for Technology, Ethics and Culture Handbook. The ITEC Handbook is a collaborative effort between Santa Clara University's Markkula Center for Applied Ethics and the Vatican to develop a practical, incremental roadmap for technology ethics. The ITEC Handbook includes a five-stage maturity model with specific, measurable steps that enterprises can take at each level of maturity. It also promotes an operational approach to implementing ethics as an ongoing practice, akin to DevSecOps for ethics. The core idea is to bring legal, technical and business teams together during the early stages of an AI project to root out problems while they're much cheaper to fix than after deployment.

- ISO/IEC 23894:2023 IT-AI-Guidance on risk management. This standard from the International Organization for Standardization and the International Electrotechnical Commission describes how an organization can manage risks specifically related to AI. The guidance can help standardize the technical language characterizing underlying principles and how they apply to developing, provisioning or offering AI systems. It also covers policies, procedures and practices for assessing, treating, monitoring, reviewing and recording risk. It's highly technical and oriented toward engineers rather than business experts.

- NIST AI Risk Management Framework. This guide from the National Institute of Standards and Technology for government agencies and the private sector focuses on managing new AI risks and promoting responsible AI. Abhishek Gupta, founder and principal researcher at the Montreal AI Ethics Institute, pointed to the depth of the NIST framework, especially its specificity in implementing controls and policies to better govern AI systems within different organizational contexts.

- Nvidia NeMo Guardrails. This toolkit provides a flexible interface for defining specific behavioral rails that bots must follow. It supports the Colang modeling language. One chief data scientist said his company uses the open source toolkit to prevent a support chatbot on a law firm's website from providing answers that might be construed as legal advice.

- Stanford Institute for Human-Centered Artificial Intelligence. The mission of HAI is to provide ongoing research and guidance on best practices for human-centered AI. One early initiative, in collaboration with Stanford Medicine, is "Responsible AI for Safe and Equitable Health," which addresses ethical and safety issues surrounding AI in health and medicine.

- "Towards Unified Objectives for Self-Reflective AI." Authored by Matthias Samwald, Robert Praas and Konstantin Hebenstreit, this paper takes a Socratic approach, using dialogue and questioning about truthfulness, transparency, robustness and alignment of ethical principles to identify underlying assumptions, contradictions and errors. One goal is to develop AI metasystems in which two or more component AI models complement, critique and improve one another's performance.

- World Economic Forum's "The Presidio Recommendations on Responsible Generative AI." These 30 action-oriented recommendations help organizations navigate AI's complexities and harness its potential ethically. The white paper also includes sections on responsible development and release of generative AI, open innovation, international collaboration and social progress.
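To illustrate the idea of a behavioral rail from the NeMo Guardrails entry, here is a toy, plain-Python input check that refuses requests resembling legal advice before they reach the chatbot. It does not use NeMo Guardrails' API, which expresses rails in Colang and configuration files; the regex patterns, the refusal text and the `support_bot` stand-in are all hypothetical.

```python
import re
from typing import Callable

# Hypothetical phrases suggesting a request for legal advice. A production
# rail would rely on a classifier or a guardrail framework, not a few regexes.
LEGAL_ADVICE_PATTERNS = [
    r"\bshould i sue\b",
    r"\bis it legal\b",
    r"\bmy legal (options|rights)\b",
    r"\b(contract|lease) .* (valid|enforceable)\b",
]

REFUSAL = ("I can't give legal advice. For questions about your situation, "
           "please contact one of our attorneys.")


def guarded_reply(message: str, bot: Callable[[str], str]) -> str:
    """Screen the user's message before the bot ever sees it."""
    text = message.lower()
    if any(re.search(pattern, text) for pattern in LEGAL_ADVICE_PATTERNS):
        return REFUSAL
    return bot(message)


def support_bot(message: str) -> str:
    # Stand-in for the real chatbot, such as a call to a language model.
    return "Our office is open 9 a.m. to 5 p.m., Monday through Friday."


print(guarded_reply("What are your office hours?", support_bot))
print(guarded_reply("Should I sue my landlord?", support_bot))
```

Keeping the rail outside the bot means the policy can be reviewed and tested on its own, independently of whatever model sits behind it.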

These organizations offer a range of resources that serve as a solid foundation for developing ethical AI practices. Using them as a framework can help organizations develop and use AI ethically.

The future of ethical AI

Some argue that an AI code of ethics can quickly become outdated and that a more proactive approach is required to keep pace with a rapidly evolving field. Arijit Sengupta, founder and CEO of Aible, an AI development platform, said, "The fundamental problem with an AI code of ethics is that it's reactive, not proactive. We tend to define things like bias and go looking for bias and trying to eliminate it -- as if that's possible."

A reactive approach can have trouble dealing with bias embedded in the data. For example, if women haven't historically received loans at the appropriate rate, that history gets woven into the data in multiple ways. "If you remove variables related to gender, AI will just pick up other variables that serve as a proxy for gender," Sengupta said.
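Sengupta's point about proxies can be checked, at least crudely, by setting the removed attribute aside for auditing and measuring how strongly each remaining feature tracks it. This minimal sketch uses Pearson correlation with a hypothetical 0.5 threshold on made-up applicant data; the feature names and values are illustrative assumptions.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0


def flag_proxies(features: dict[str, Sequence[float]],
                 protected: Sequence[float],
                 threshold: float = 0.5) -> dict[str, float]:
    """Return features whose |correlation| with the removed attribute meets the threshold."""
    scores = {name: pearson(col, protected) for name, col in features.items()}
    return {name: r for name, r in scores.items() if abs(r) >= threshold}


# Hypothetical applicants. Gender (1 = woman, 0 = man) is excluded from the
# model's inputs but kept aside here so the remaining features can be audited.
gender = [1, 1, 1, 1, 0, 0, 0, 0]
features = {
    "income":          [41, 39, 45, 40, 44, 42, 38, 43],
    "part_time_years": [6, 5, 7, 6, 1, 0, 2, 1],  # tracks gender in this data
    "credit_lines":    [3, 2, 4, 3, 3, 4, 2, 3],
}
print(flag_proxies(features, gender))
```

A single-feature check like this misses proxies that only emerge from combinations of features, so a common extension is to train a model to predict the protected attribute from all remaining inputs and treat high accuracy as a warning sign.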

He believes the future of ethical AI requires defining fairness and societal norms. At a lending bank, for example, management and AI teams would need to decide whether they want to aim for equal consideration, such as processing loans at an equal rate for all races; proportional outcomes, where the success rate for each race is relatively equal; or equal impact, which ensures that a proportional amount of loans goes to each race. The focus needs to be on a guiding principle rather than on something to avoid, Sengupta argued.
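The three lending goals above can be expressed as per-group metrics. The following sketch interprets them under stated assumptions: equal consideration as each group's approval rate, proportional outcomes as the repayment rate among approved loans, and equal impact as a group's share of approved loans divided by its share of applicants (1.0 meaning proportional). These mappings, the `Application` type and the data are hypothetical, not Aible's definitions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Application:
    group: str                     # hypothetical demographic group label
    approved: bool
    repaid: Optional[bool] = None  # only known for approved loans


def fairness_report(apps: list[Application]) -> dict[str, dict[str, float]]:
    by_group: dict[str, list[Application]] = defaultdict(list)
    for app in apps:
        by_group[app.group].append(app)

    total_apps = len(apps)
    total_approved = sum(app.approved for app in apps)
    report = {}
    for group, rows in sorted(by_group.items()):
        approved = [app for app in rows if app.approved]
        report[group] = {
            # Equal consideration: share of this group's applications approved.
            "approval_rate": len(approved) / len(rows),
            # Proportional outcomes: share of this group's approved loans repaid.
            "repayment_rate": (
                sum(bool(app.repaid) for app in approved) / len(approved)
                if approved else float("nan")
            ),
            # Equal impact: share of all approved loans relative to share of
            # applicants; 1.0 means the group receives a proportional amount.
            "impact_ratio": (
                (len(approved) / total_approved) / (len(rows) / total_apps)
                if total_approved else float("nan")
            ),
        }
    return report


# Hypothetical data: 10 applicants per group.
apps = (
    [Application("A", True, True)] * 5
    + [Application("A", True, False)]
    + [Application("A", False)] * 4
    + [Application("B", True, True)] * 3
    + [Application("B", False)] * 7
)

for group, metrics in fairness_report(apps).items():
    print(group, {name: round(value, 2) for name, value in metrics.items()})
```

In this made-up data, group B is approved at half of group A's rate but repays at a higher rate, which illustrates why teams have to choose which definition of fairness to pursue: satisfying one metric doesn't guarantee the others.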

Most people agree that it's easier and more effective to teach children what their guiding principles should be than to list every possible decision they might encounter and tell them what to do and what not to do. "That's the approach we're taking with AI ethics," Sengupta said. "We're telling a child everything they can and cannot do instead of providing guiding principles and then allowing them to figure it out for themselves."

For now, humans develop the rules and technologies that promote responsible AI. Shepherd said this includes building products and offerings that protect human interests and aren't biased against certain groups, such as minorities, people with special needs and the poor. The last of these is especially concerning, as AI has the potential to spur massive social and economic warfare, widening the divide between those who can afford technology -- including human augmentation -- and those who can't.

Society also urgently needs to plan for the unethical use of AI by bad actors. Today's AI systems range from fancy rules engines to ML models that automate simple tasks to generative AI systems that mimic human intelligence. "It might be decades before more sentient AIs begin to emerge that can automate their own unethical behavior at a scale that humans wouldn't be able to keep up with," Shepherd said. But, given the rapid evolution of AI, now is the time to develop the guardrails to prevent this scenario.

The future might bring more lawsuits, such as the one The New York Times filed against OpenAI and Microsoft over the use of Times articles as AI training data. OpenAI has also been involved in other controversies that could shape the future, such as its consideration of a shift toward a for-profit business model. Elon Musk has also announced an AI model that sparked controversy over both the images it can create and the environmental and social impact of the facility where it's being housed. The future of AI might see AI companies continue to push ethical boundaries, alongside pushback over ethical, social and economic concerns and calls from governments and citizens for more ethical implementations.

Recent developments in generative AI have also brought new urgency to the question of how to implement these systems ethically.